BUNNY THE TALKING SHEEPADOODLE has 8.3 million followers on TikTok. In her videos, she ‘talks’ using an array of buttons laid out on interlocking puzzle-piece foam mats. There are nearly a hundred buttons, each one sounding a single English word like ‘play’ or ‘ball.’ In one video from two years ago, Bunny yips in her sleep. Her owner wakes her and asks, “what talk sleep?” Bunny seems to deliberate a bit, then finally mashes a paw on “stranger,” and then “animal.”

“Stranger animal” could be the only reportage humans have ever received from the realm of nonhuman dreams.1 It reminds me of those few grainy panoramas of a boulder-strewn Venus, beamed home by probes in the moments before the planet’s atmosphere destroyed them. Each is a glimpse of a reality so far away that it can only be seen briefly. When Bunny’s dream went viral, her audience seemed to recognize what this moment could mean.

NOW I GET NEWS from a seemingly alien consciousness every day, hour after hour, through social media. We are in this strange and very brief period—A year? Less?—when we can still see the seams and imperfections in AI art. The uncanny valley, it turns out, is not one vast basin to be traversed, but a place with many crags and crannies, some of them deep and weird.

When I rejoined Instagram four years ago, I let the app steer me toward great (human-made) CGI art. When AI art exploded last year, I let it steer me toward that. My finds, meaning the things the machine curates for me, are astounding. Here are four:

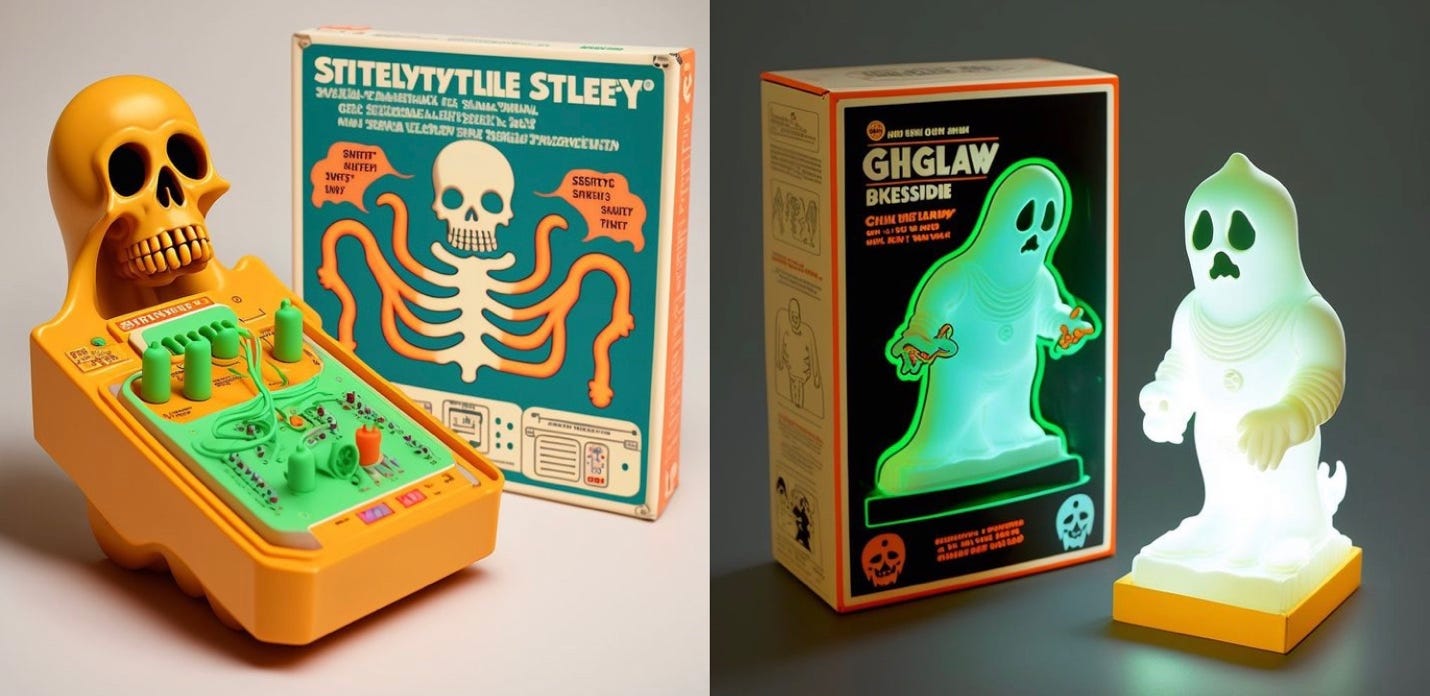

Creepmart showcases monster-themed toys from the specific era of my childhood, 1975–1985. “Nothing is real, nothing is for sale.” The gibberish text on the exquisite packaging gives the feel of expensive imports. Some of these pieces hurt to look at. Images this evocative will soon be marketed to me, not just as 3D-printed gewgaws to purchase but as components of larger ad campaigns.2

Artificial Hotel tours hotels of the world, some deluxe, some austere, all inviting, none real. It’s like an endless virtual coffee-table book of striking photos. Except it’s not “like” that; it more or less “is” that. Christ, I don’t even know where to put quotation marks anymore.

Tim Molloy, a gifted illustrator in the vein of Mœbius, built Legends of the Golden Child, a terrifying fictional show set inside a somehow more terrifying fictional world. His Lovecraft spaghetti western comes complete with posters, merch, production stills and its own written language. Really visceral, skin-crawly stuff. Two thumbs up (on the same hand).

The Timeout Zone shows shots from “a forgotten TV series,” in the vein of The Twilight Zone or The Outer Limits. Seemingly real actors wear realistically hokey monster makeup. The furniture and lighting and hairstyles and expressions feel true to an anthology horror show from sixty years ago. If you’ve ever watched these shows, you understand how self-referentially creepy this is.

These accounts share certain commonalities, as does almost all AI art (a term I’m using to include AI photography).3 But every day those commonalities get a little harder to pin down. Obviously, words and hands are big tells for this micro-era. But something’s off with the faces. Not all of them, but enough. They share an apprehensive stare, as if they all know something we, the real people, do not. If ChatGPT is Wikipedia for alternate universes, then these accounts feel like slideshows from those same worlds.

SOON, PROBABLY LATER THIS year, we’ll have streaming services from these worlds. Right now (early May 2023) we have three new types of video. The first will probably be short-lived: characters immobilized except for blinks and slight head nods. An AI mashup of Breaking Bad and Balenciaga is a good example of this style. The characters’ limited mobility reminds me of the current crop of “animate your deceased relatives” apps (or Clutch Cargo cartoons from 1960).

The second type of video is made with Stable Diffusion (a text-to-image AI model) and Deforum (open-source animation software built on top of it). With Deforum, Stable Diffusion re-imagines every frame of a source clip, such as a modified CGI film, each frame spinning, panning, or zooming as it goes. The result is dreamlike, a kinetic blur of constant change, one that can turn nightmarish very quickly. Probably a blink-and-you’ll-miss-it genre. What replaces it will surely be far scarier.

The last type of new video is motion pictures. Plain old cinema, the kind we people used to make, only now made by machines. This is happening as I write. Less than two weeks ago, a German filmmaker released a minute-and-a-half trailer for an AI film in the style of Baz Luhrmann. It looks janky, but so did The Great Train Robbery. The machines will learn fast. Each AI film advance is itself a micro-genre, or at least a milli-genre. How long until the next big leap? A month? A week?

What is the smallest creature to dream? Surely the 39 trillion microbes that live inside every human body (each individual member of the microbiome) don’t dream. But what about larger life forms? Certain spiders show signs of REM-like sleep. Do Demodex mites dream as they nest at the base of our eyelashes?

People under emotional stress are more susceptible to marketing. Algorithms can detect inner states, concealed emotions, even physical disease (Israeli startup Vocalis Health uses “speech biomarkers” to detect Covid). Marketers will use these tools in ways we can’t currently imagine.

I’ve seen a lot of confusion between NFT art and AI art. They’re very different things. NFT art is something close to a genre, one whose apparently deliberate shittiness seems entwined with the transparent grift of its own medium. AI art has ‘tells’ but covers all genres. Ultimately, it could be anything.

Reminds me so much of two world-bending pre-AI efforts: Jakub Różalski’s The World of 1920+ on one hand and, on the other, that show from recent years, Tales from the Loop.